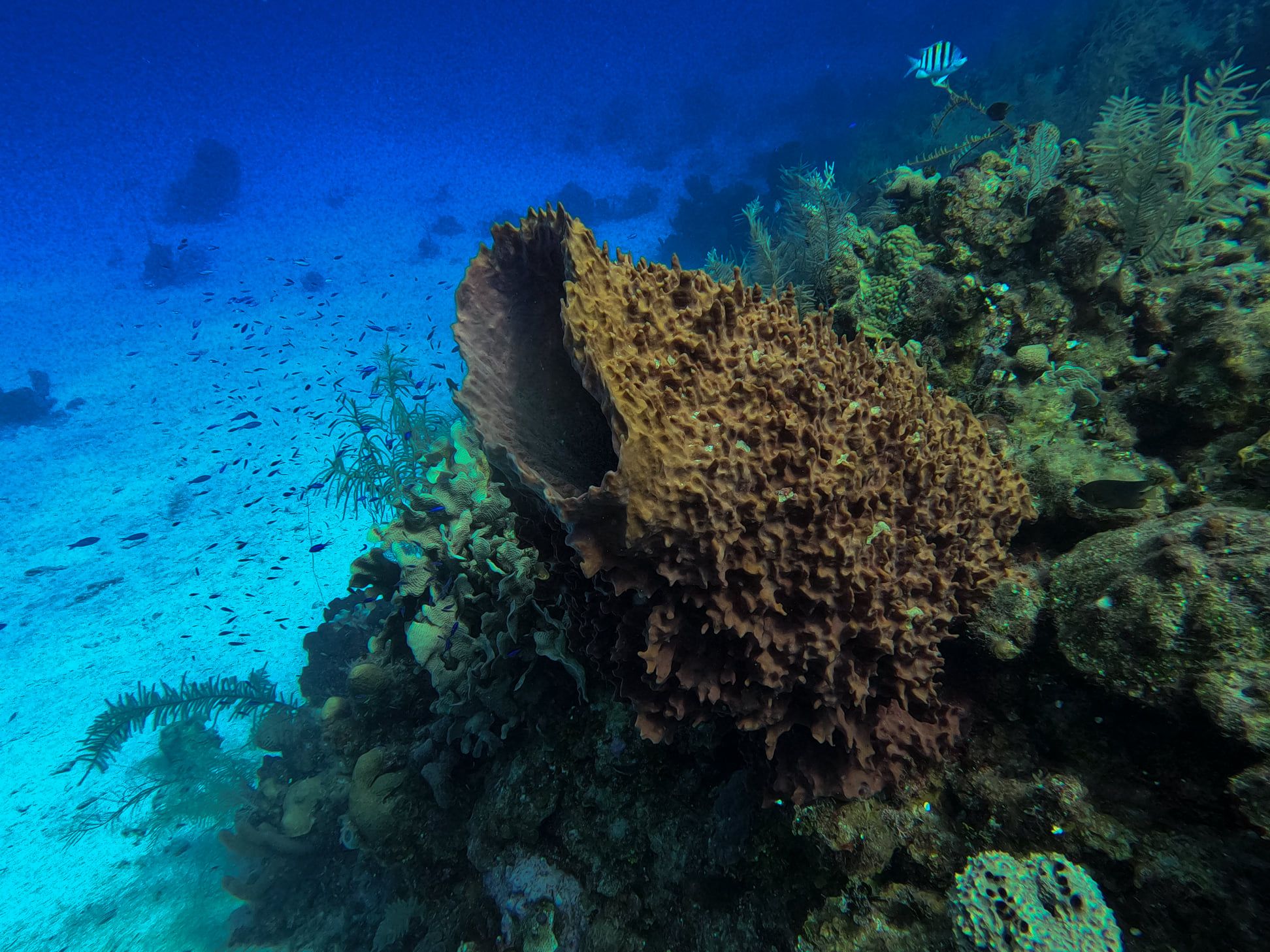

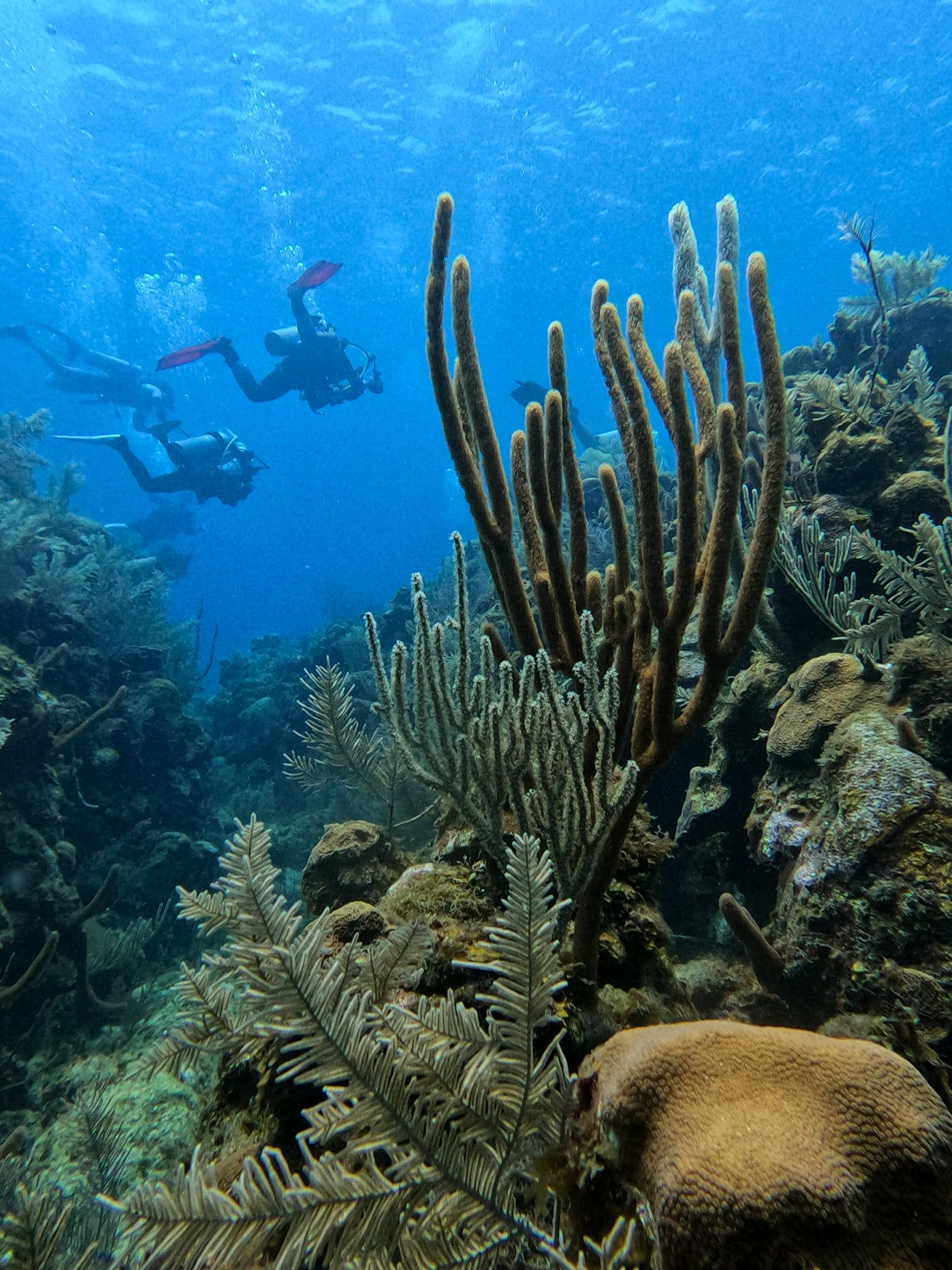

Roatan is an incredible place to scuba dive, with some of the best reefs in the Western Hemisphere. The reef terrain is complex and very three-dimensional, with deep canyons, caverns, and swimthroughs, and there's a richness of both sea life and coral species. Here's what it looked like:

Coincidentally, I got access to DALL-E 2 while I was there last week. I started wondering, "Could I use DALL-E 2 to create a fake vacation? Or, more ethically, could I use DALL-E 2 to recreate events from my vacation that actually happened, but I was unable to get a good photo of them?" I started using their interface to generate some synthetic vacation photos, and indeed a good portion of them were good enough to pass off as the real thing.

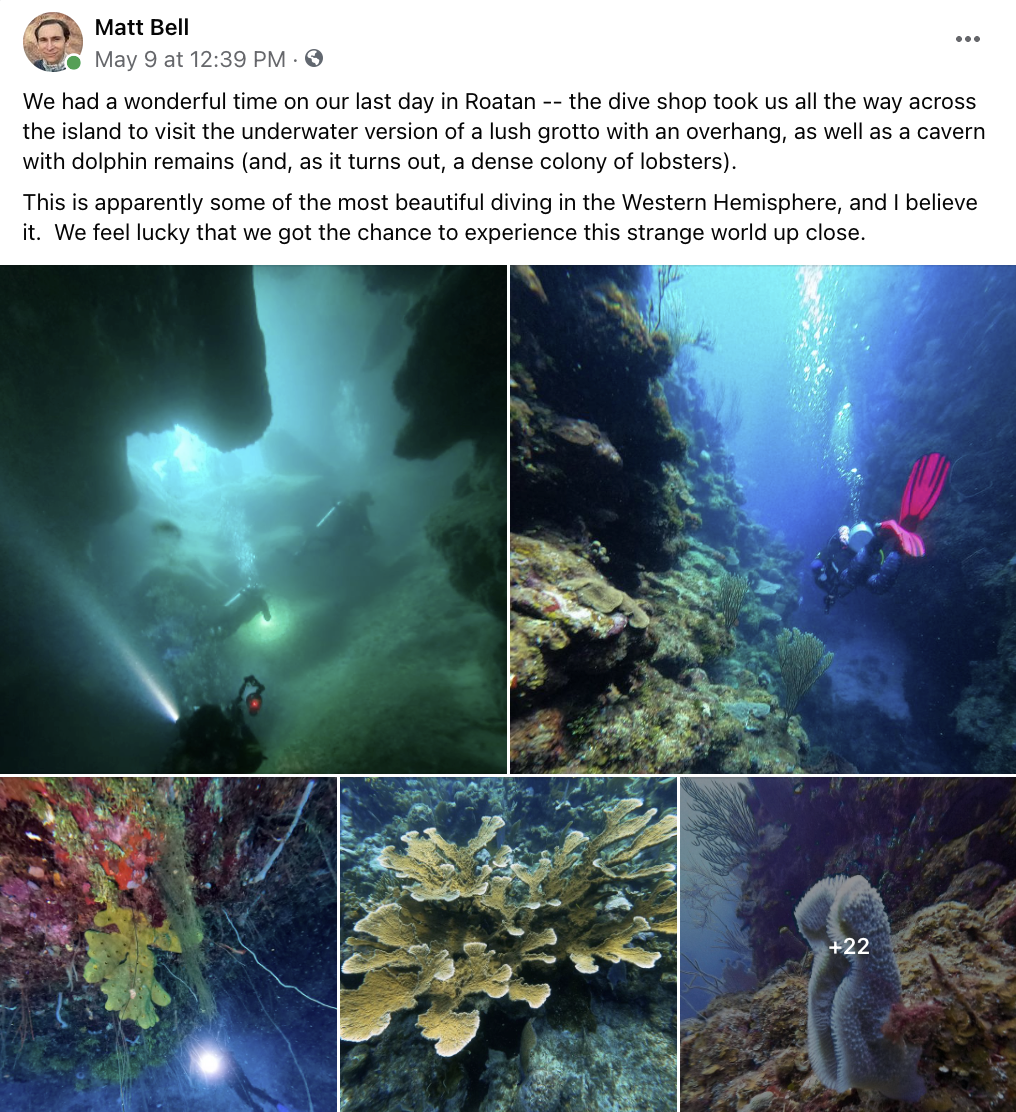

I decided to test out the quality of these synthetic images by intermixing them into a Facebook post with real images. The post started out like this:

Users scrolled through the photos one by one, either in a slide reel on the web interface or in a column on the mobile interface. There were 22 real photos, four synthetic ones, and one final image that revealed the experiment. The synthetic ones all came after the real ones.

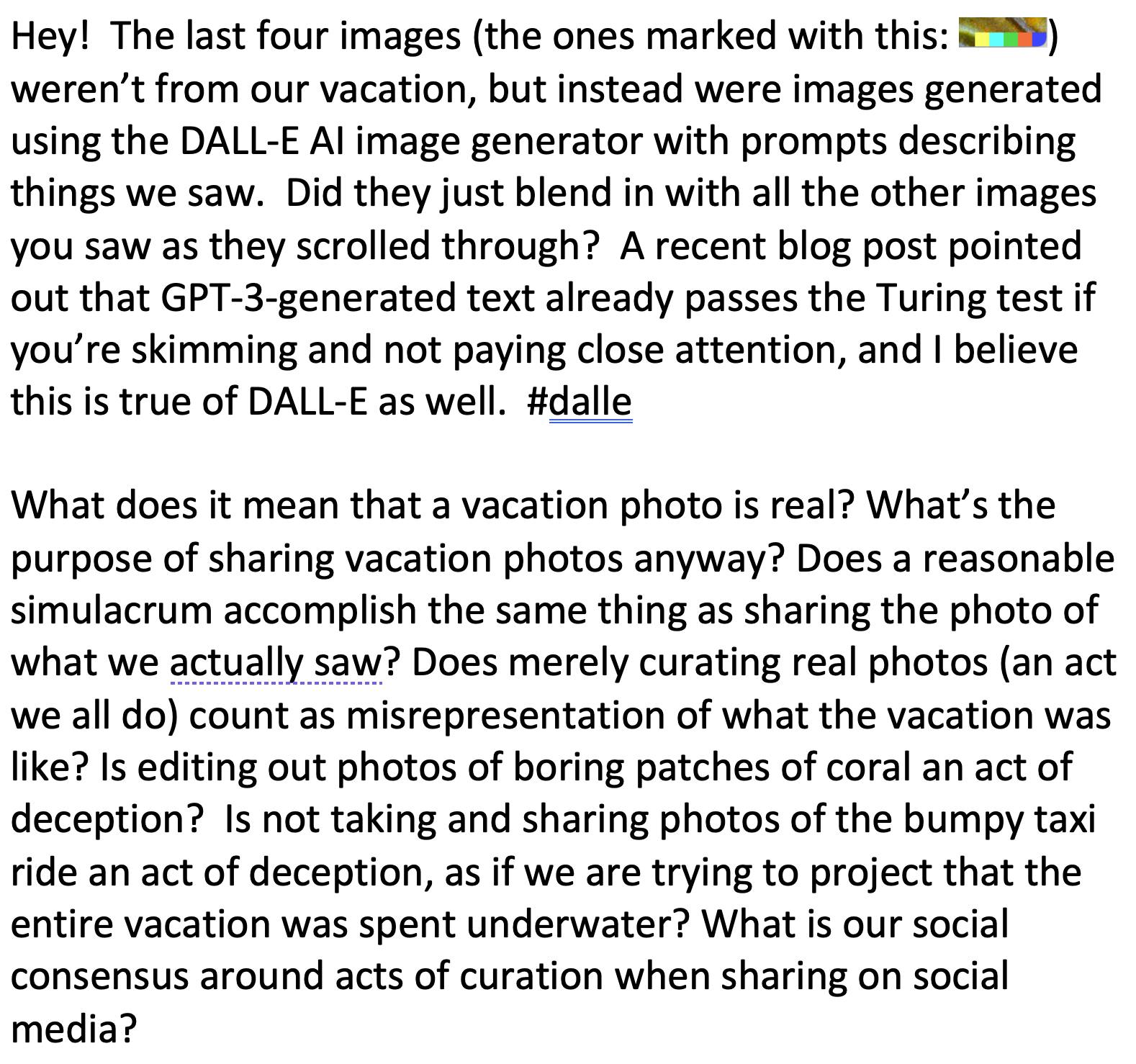

The reveal:

An incredible 83% of the people (19 of 23) who answered the survey at the end missed the fact that there was something different about the DALL-E images. This worked despite the fact that the telltale DALL-E watermark was in the lower right corner (to comply with OpenAI'a access rules), and the fact that the images were a different aspect ratio and more grainy. My friend group is also a relatively sophisticated audience that is well aware of the existence of DALL-E and deepfakes.

Here's what I think is going on:

- Our brains are partly on autopilot when we're browsing social media, and scrutinizing images takes extra mental labor. There's a great blog post I read a while back that pointed out that GPT-3-generated text already passes the Turing Test for users who are skimming the text and not closely paying attention. I believe something similar is taking place here, where we go through image galleries at a rate of one every couple of seconds, and it's actually hard to detect the subtle signs of deepfakery in a couple of seconds even if you are paying close attention.

- It's likely that with a harder version of the Turing Test, in which real and fake images of the same content are presented side by side and people are told that one of them is fake, it would be much easier to detect the fake images.

- A Facebook post shared among friends is a high-trust low-stakes environment, so people aren't in a headspace to scrutinize the images. If you look closely at some of the DALL-E images, you can see some obvious telltale signs of fakery. There are scuba hoses to nowhere in the fake diver photo, and the fins sort of blur together. (I did instruct the algorithm to use pink fins to match the ones Helen wears in real life, and I was pleased it executed well on that.)

- As one Facebook commenter pointed out, undersea environments are alien enough that most people don't have good subconscious priors for detecting fakery. That said, there were plenty of avid scuba divers who were fooled.

- We're not yet well-tuned enough at detecting deepfakes, but this will come with time. I recall watching The Fifth Element (1997) in the theater as a kid and being wowed but the Future Manhattan CGI, but now it just looks like something a college student would do in an Intro to Computer Animation course. The difference is that our brains are now trained to detect the telltale signs of computer animation. However, deepfakes will get better over time, just as computer animation did.

- All that being said, DALL-E 2 is really good, good enough that it could produce useable stock images for a range of applications. It still struggles with faces, as well as complex scenes where the prompt has several adjectives attached to several nouns, but it can produce photorealistic imagery or stylized art that's usable much of the time given some human curation. It seems that these networks are doing a variant of "guessing the teacher's password" instead of truly understanding what they're drawing, and over time these failures could be addressed through greater use of 3D training data so the networks start to learn the real shape and functional connectivity of objects instead of just what a range of 2D views of them look like.

While I am concerned about the effect of deepfakes on, say, politics, the last few years in America have proven that you don't need deepfakes to deceive people; all you need is a bald-faced lie that people want to believc, and if you repeat it enough you can convince millions of people of your lie despite overwhelming logic and evidence to the contrary.

Meanwhile, the frontier for expanding people's visual creative abilities is wide open, and I'm excited to see what the next few years will bring. I think generative image systems won't obsolete artists any more than Photoshop did; they're simply another tool for amplifying the connection between imagination and a physical manifestation of it, and imagination is still the scarce commodity that good artists possess.